How to use data import templates

With Data Pump, you don’t have to waste time configuring the same settings each time you want to import your data. You can configure them once, save them to a custom template file, and reuse them whenever it is required - for instance, if you need to perform daily import operations.

How to create a template

1. First, you need to configure the settings to be saved as a template. In Object Explorer, right-click a database, point to Data Pump, and click Import Data.

2. The actual configuration of settings may differ, depending on the format. You will find the full workflow for each format in the corresponding topic:

- Text

- Microsoft Excel (XLS)

- Microsoft Excel 2007+ (XLSX)

- Microsoft Access

- XML

- JSON

- CSV

- ODBC

- DBF

- Google Sheets

3. Once you have configured the settings for your future template in the wizard, click Save Template.

4. Specify the name and the location of your template file in the Save As dialog box and click Save.

That’s it! Now let’s see how effortless it is to load your template.

How to load a template

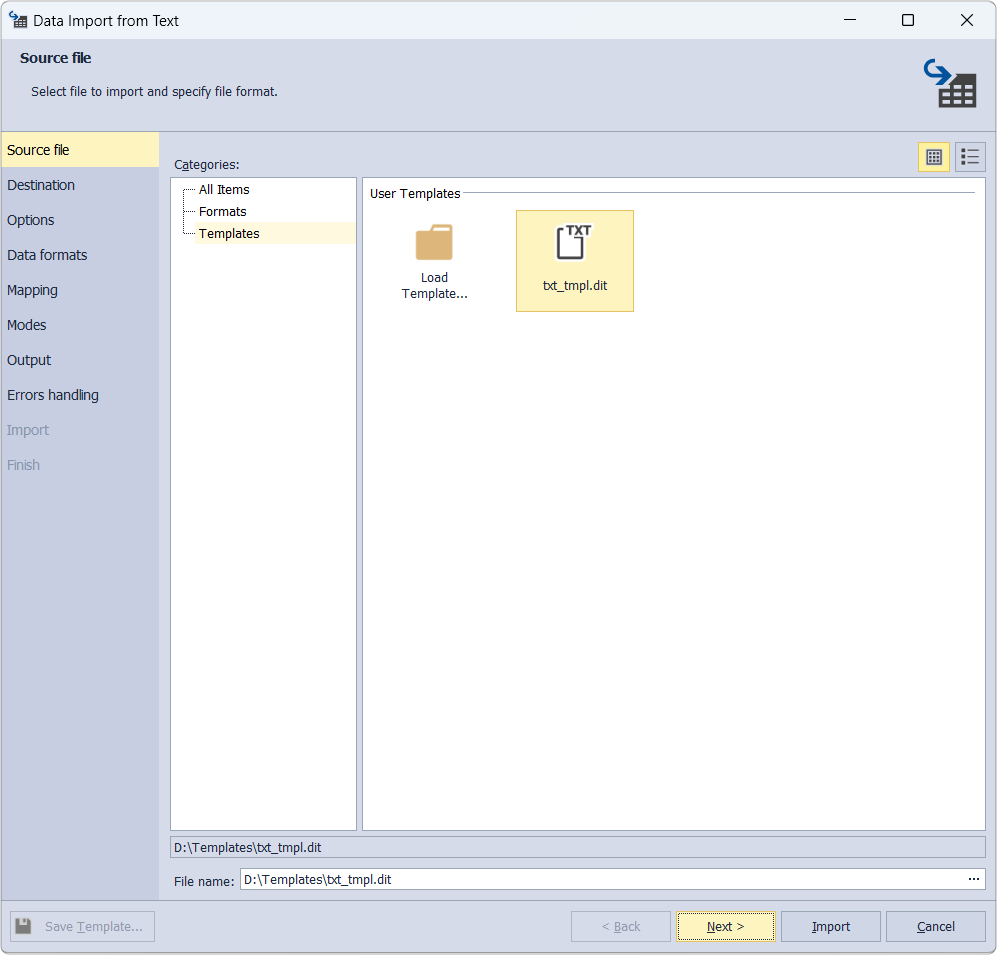

1. Once more, go to Object Explorer, right-click a database, point to Data Pump, and click Import Data to proceed to the Source file page.

2. In Categories, select Templates. Then find the saved template among User Templates and select it. In our example, it is txt_tmpl.dit.

3. Click Next. On the Destination page, you can modify the connection, database and its schema, table(s) and view(s). Also, if you want the template to be imported into a new table, then select New table and enter the table name.

To import to one of the existing tables, select Existing table and click the desired one.

As soon as you’re done, click Next.

4. On the Options page, you can configure the formatting options for imported data. They are:

-

Encoding allows setting the required encoding.

-

Quote string enables you to enter the character that will be used for string quoting.

-

Skip lines lets adjust the quantity of lines to be skipped during the import process. The lines are counted from the top.

-

Header position permits you to specify the position of the header. It will be highlighted with blue in the Preview section. If you don’t specify the header position, the imported columns will get default names - column1, column2, etc.

-

Import even lines only allows importing only even lines.

-

Manual makes it possible to add manual splitting. Go to the Preview section and double-click the required places to set column ranges. The markers will appear. You can move them forward or backward to change the column length. To remove a marker, double-click it.

-

Fixed width lets configure the splitting width.

-

Text Separator permits choosing the required option for the text separator.

When you finish with the page, click Next.

5. On Data formats, there are two tabs: Common Formats and Column Settings.

On the Common Formats tab, you can can configure the formats for null strings, thousand and decimal separators, boolean variables, date and time. There is also the Autodetect Date and Time format checkbox, selected by default.

Column Settings allows you to specify the format settings for separate columns. You have 4 options here: Null String, Left Quote, Right Quote, and Date and Time. Note that if a format mask is not set, the application will identify date/time values automatically.

After you have configured the settings, click Next.

6. On the Mapping page, you can map the source columns to the target ones. If you are importing data into a new table, the application will automatically create and map all the columns.

Note

If you are importing into an existing table, only columns with the same names will be mapped, and the rest should be mapped manually. If no columns with the same name are found, they are mapped in succession - the 1st column in Source with the 1st column in Target, etc.

Note

You should select at least one column with a primary key by selecting the corresponding Key checkbox. Otherwise, some of the import modes on the Modes page will be disabled.

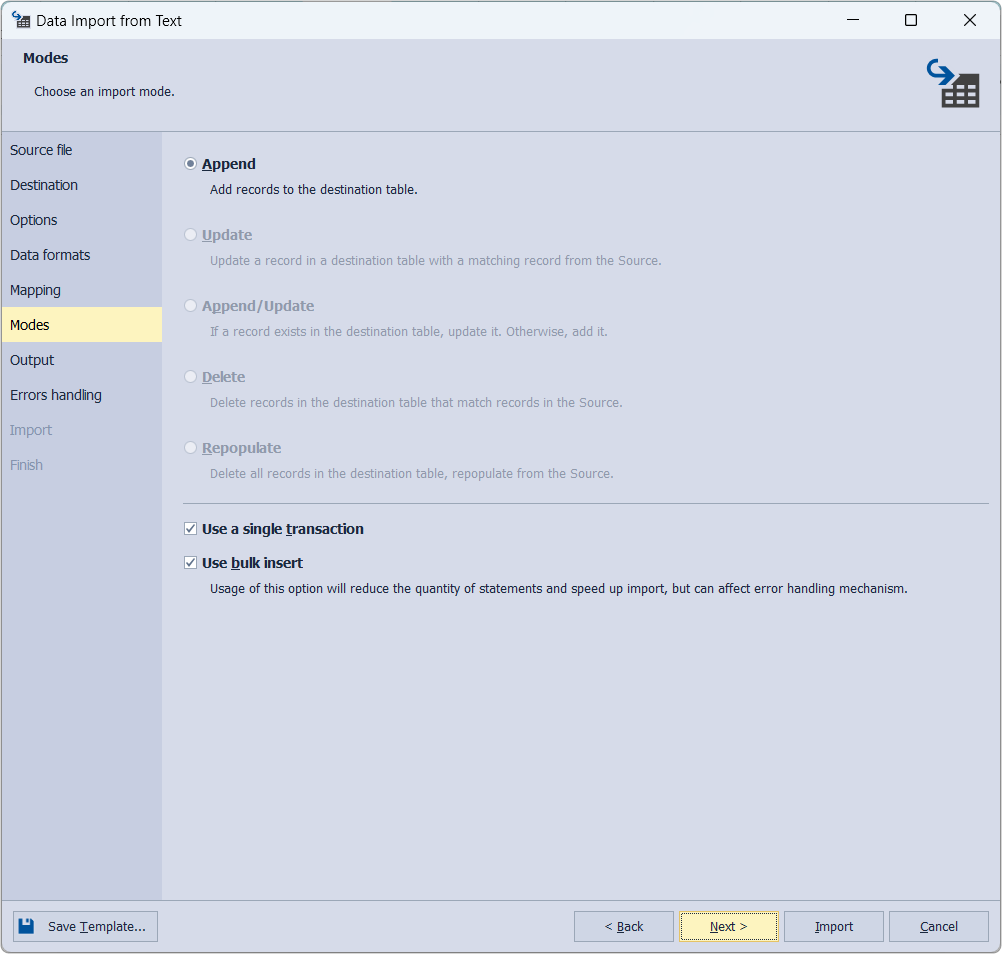

7. The Modes page includes five options for configuring the import mode. They are:

-

Append lets add records to the target table.

-

Update allows you to update a record in the target table with a matching record from the source.

-

Append/Update permits renewing a record if it exists in the target table; otherwise, adding a record.

-

Delete enables you to delete records in the target table that match records in the source.

-

Repopulate allows you to delete all records in the target table and repopulate them from the source.

Optionally, you can select Use a single transaction and Use bulk insert (the latter of which reduces the number of statements and speeds up import but can affect the error handling mechanism).

After completing the page, click Next.

8. On the Output page, you have 3 options:

- Open the data import script in the internal editor.

- Save the data import script to a file. To use this option, specify the path and the file name. Additionally, you can select the checkboxes to Add timestamp to the file name and Open in the internal editor.

- Import data directly to the database.

To go to the next page, click Next.

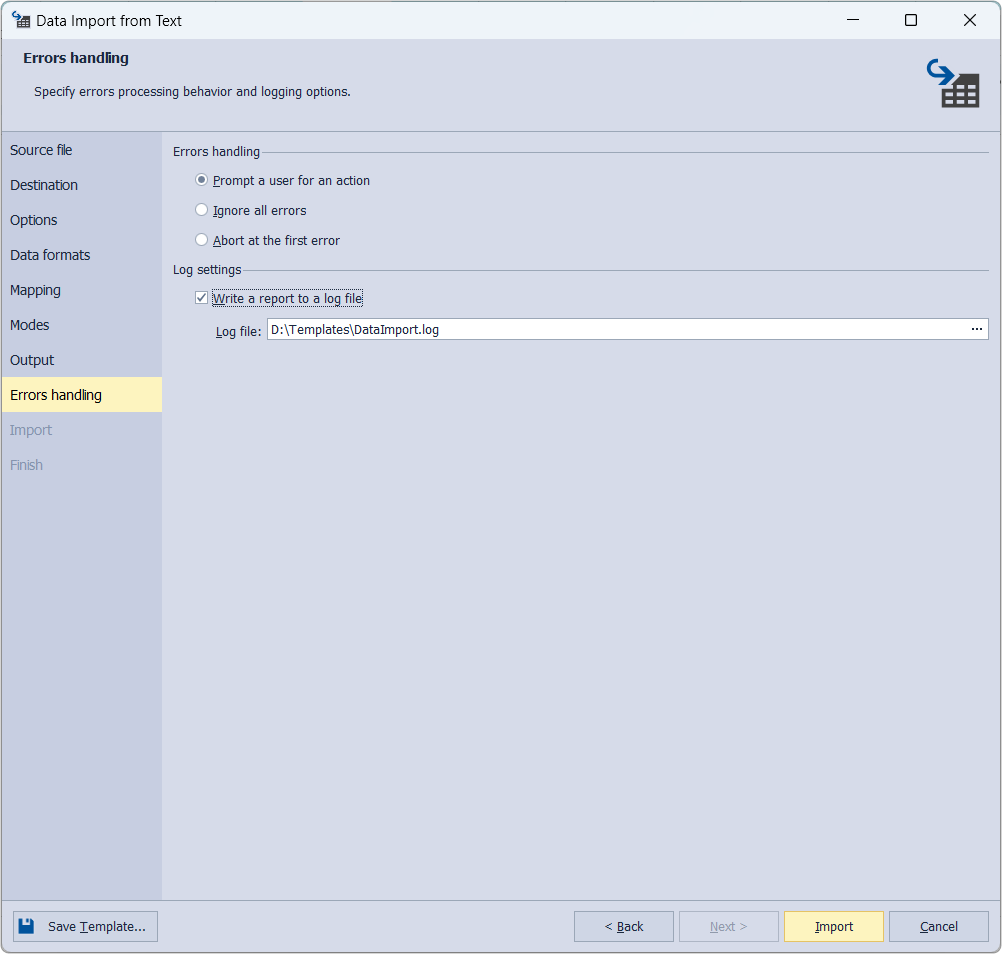

9. On the Errors handling page, it’s possible to customize the following options:

-

Prompt a user for an action empowers you to require a user’s input in case of some errors while the import process.

-

Ignore all errors lets skip all errors.

-

Abort at the first error allows you to stop the import process at the first error.

-

Write a report to a log file permits logging the import process and setting a destination path to a log file.

Finally, click Import.

9. When the import process is finished, click Finish.

Note

With templates at hand, you can automate recurring data import operations using the command line.

See also How to Build a Job to Import Multiple CSV files into a SQL Server Database