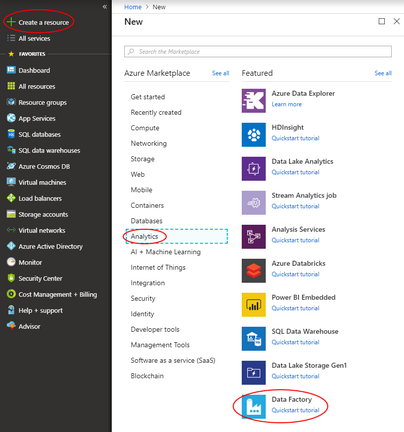

Azure Data Factory is a cloud data integration service for creating data-driven workflows that automate data movement and data transformation. It allows users to create and schedule these workflows (called pipelines), which can collect data from various data sources, process and transform it, and publish the output to data stores such as Azure Synapse Analytics.

Since v2, Azure Data Factory supports deployment of SSIS projects that use custom SSIS components, including Devart SSIS Data Flow Components. This topic guides you through configuring Azure Data Factory and all the necessary features in Azure Cloud, and through deploying Integration Services packages that use Devart SSIS Data Flow Components to Azure Data Factory.

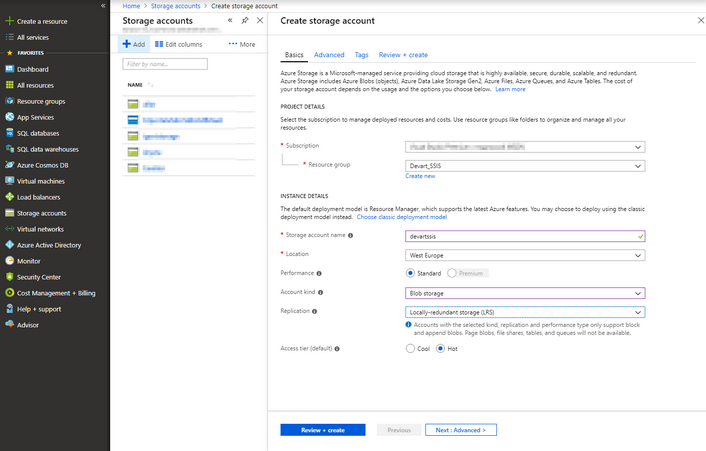

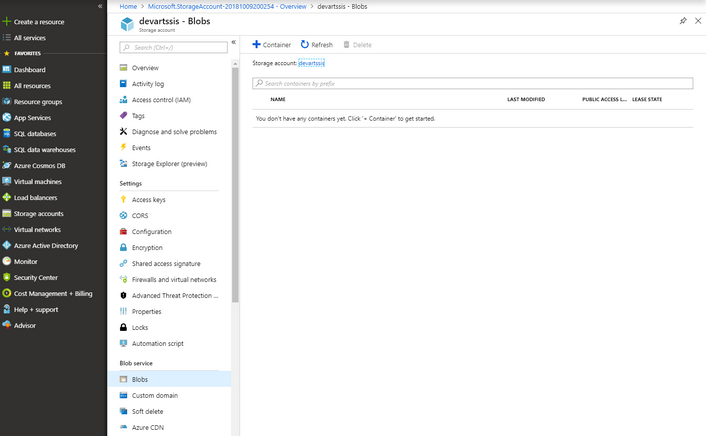

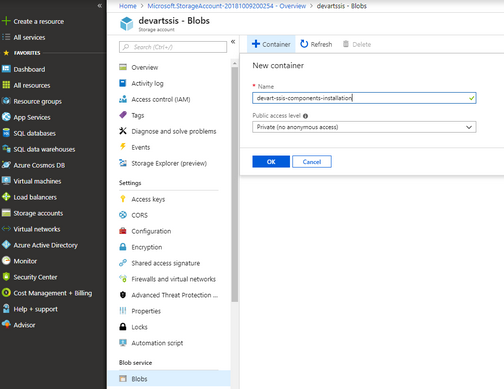

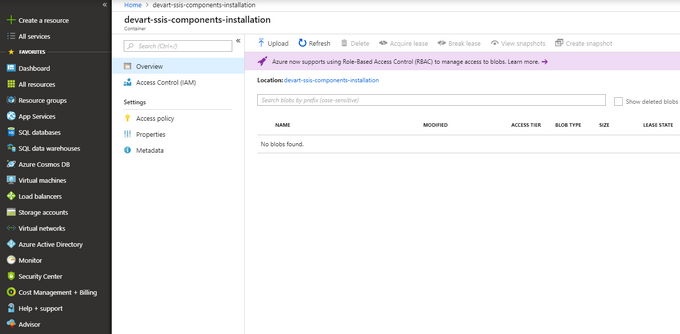

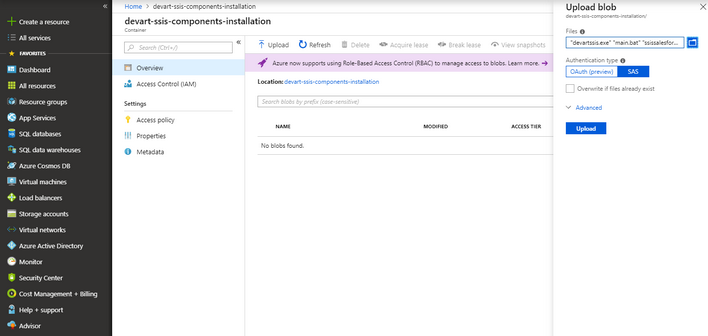

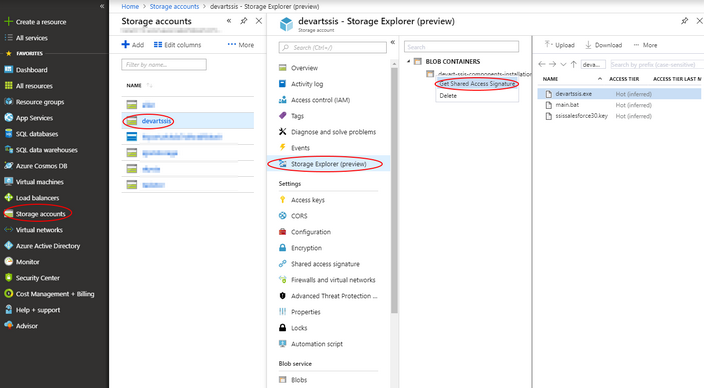

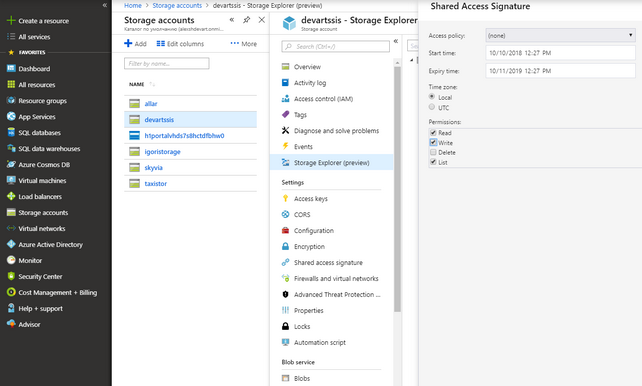

In brief, to deploy third-party SSIS components on Azure Data Factory, you need to place all the necessary files (such as the installer of the custom components) in an Azure Storage Blob container, together with a command file with the fixed name main.cmd. This command file should perform the actions required to deploy the custom SSIS components during Integration Runtime (IR) creation. The Azure Storage Blob container should be referenced in the IR creation process with a Shared Access Signature URI. The main.cmd file is run every time an Integration Runtime node is instantiated. For Devart SSIS Data Flow Components, you should place the following files in the Azure Storage Blob container:

devartssis.exe /azure /silent /log=%CUSTOM_SETUP_SCRIPT_LOG_DIR%\install.log /COMPONENTS="crm\salesforce"

For other data sources, you can use the following values of the /COMPONENTS parameter:

Below you can find a detailed tutorial on configuring Azure Data Factory and deploying all the necessary files and components to it.
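As a rough illustration of the main.cmd approach described above, the following Python sketch generates a main.cmd file containing the installer command line quoted in this topic. The helper names `build_main_cmd` and `write_main_cmd` are hypothetical and not part of any Devart or Azure tooling, and the only installer flags used are the ones shown above. It assumes that devartssis.exe will sit next to main.cmd in the same Blob container.

```python
from pathlib import Path


def build_main_cmd(components: str = r"crm\salesforce") -> str:
    """Return the text of a main.cmd that runs the Devart installer silently.

    `components` is the value passed to /COMPONENTS; the default is the
    Salesforce example from this topic. Other values are not validated here.
    """
    install = (
        "devartssis.exe /azure /silent "
        r"/log=%CUSTOM_SETUP_SCRIPT_LOG_DIR%\install.log "
        f'/COMPONENTS="{components}"'
    )
    # Windows command files conventionally end lines with CRLF.
    return install + "\r\n"


def write_main_cmd(folder: Path, components: str = r"crm\salesforce") -> Path:
    """Write main.cmd (the fixed file name) into `folder` and return its path."""
    target = folder / "main.cmd"
    target.write_bytes(build_main_cmd(components).encode("ascii"))
    return target


if __name__ == "__main__":
    # Print the generated command file so it can be reviewed before upload.
    print(build_main_cmd(), end="")
```

The generated file still has to be uploaded to the Blob container together with the installer. That step is left out of the sketch because it depends on how you access your storage account.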

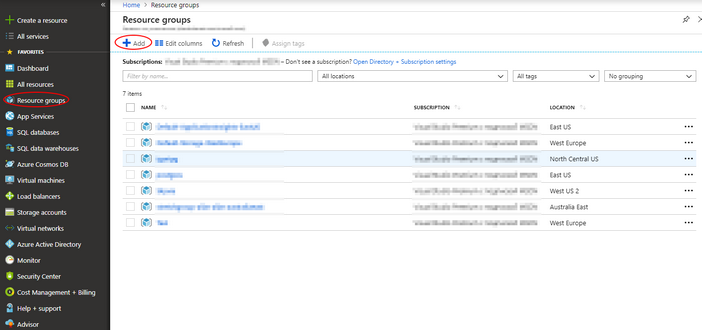

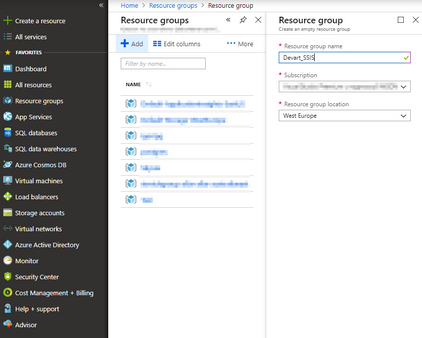

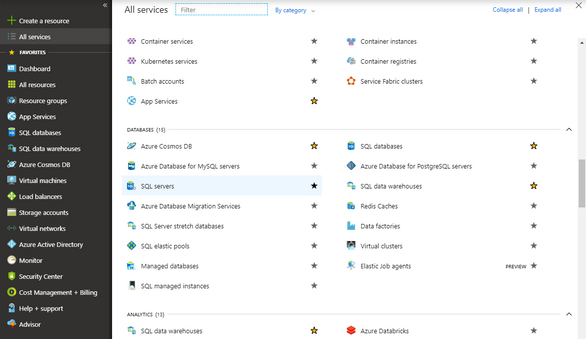

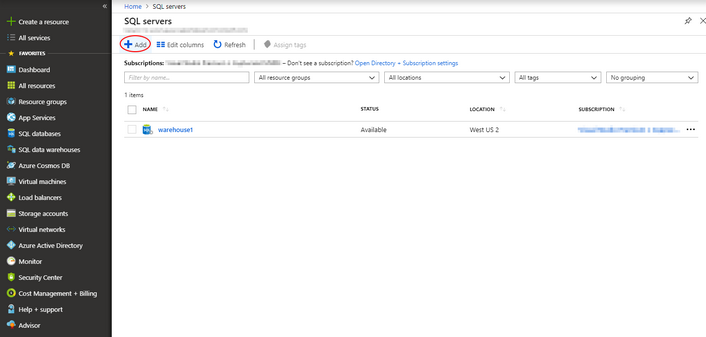

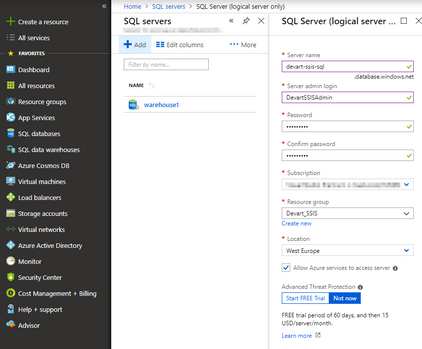

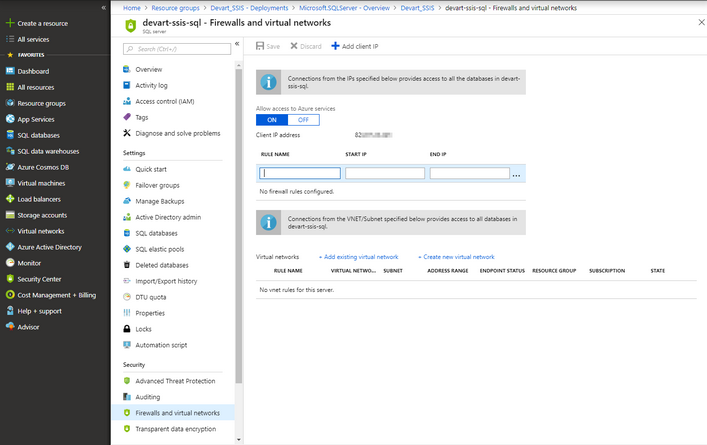

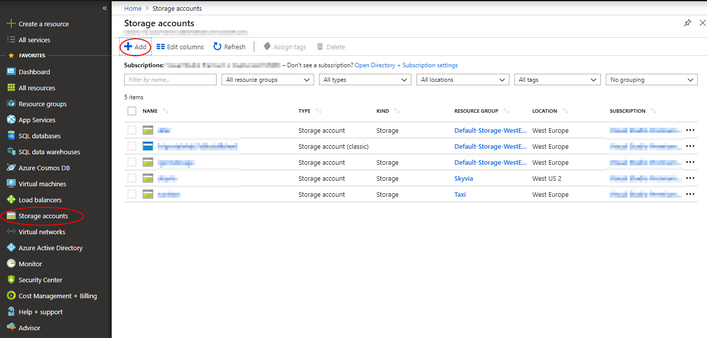

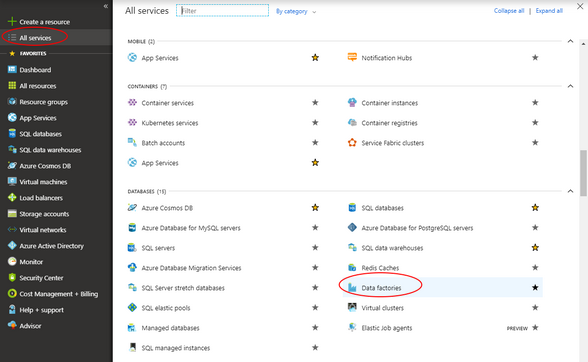

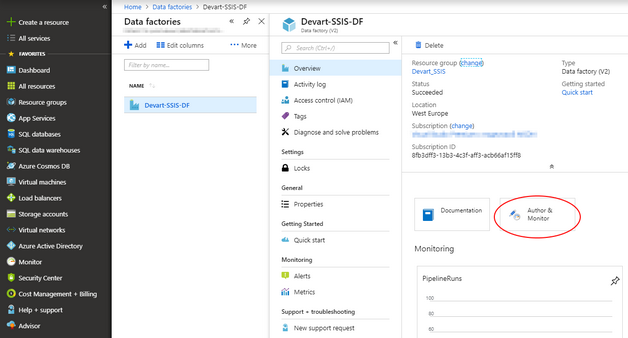

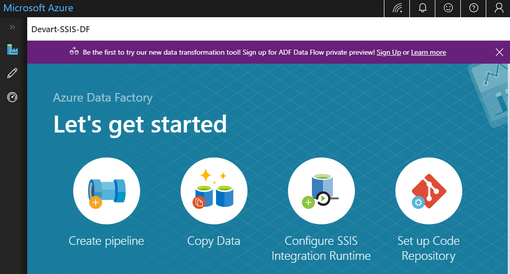

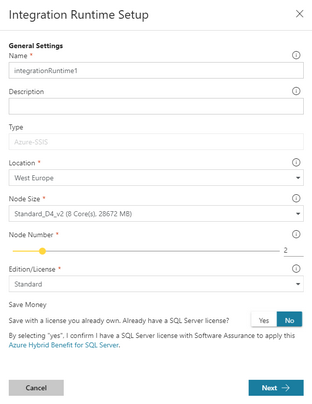

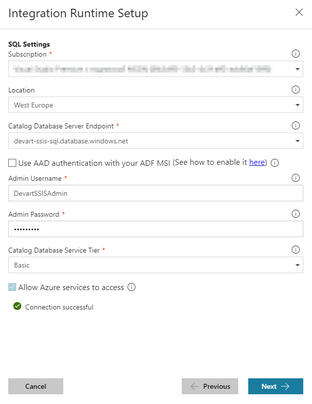

Azure Data Factory Configuration


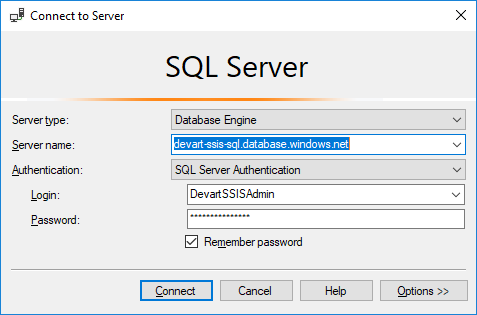

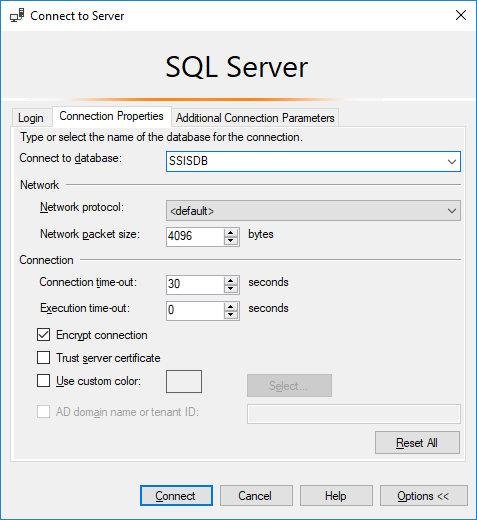

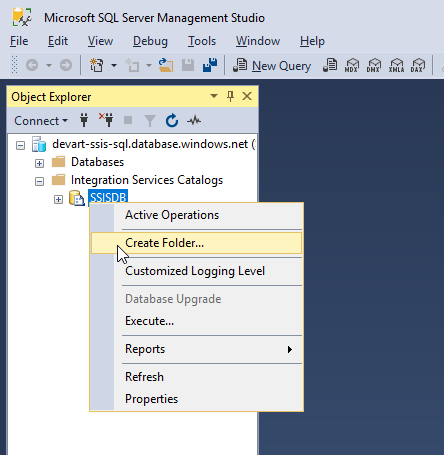

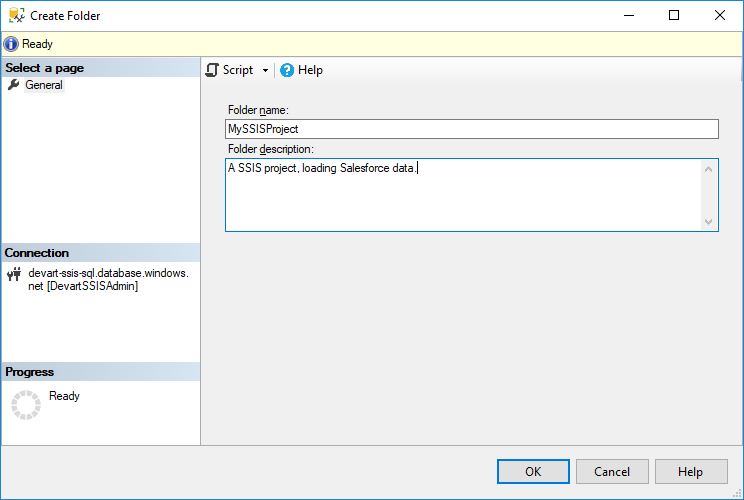

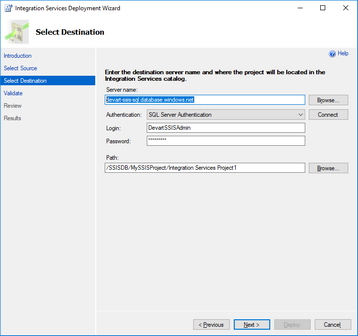

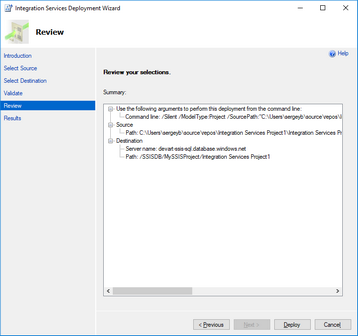

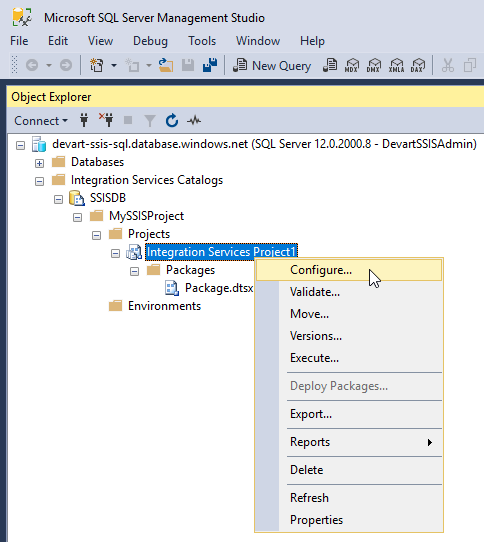

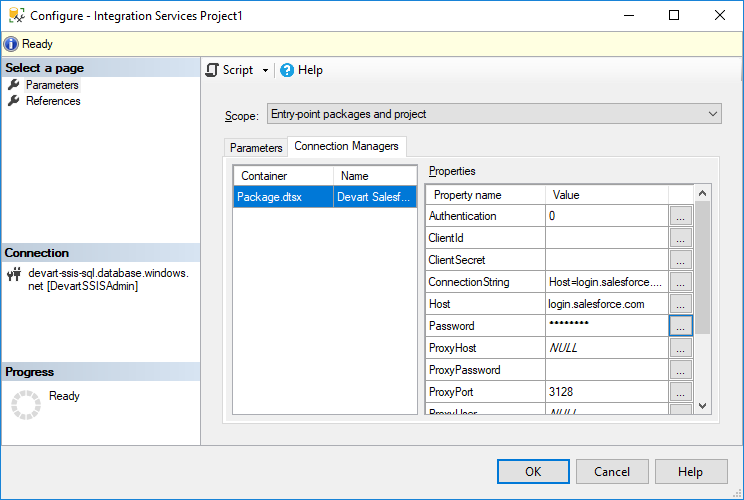

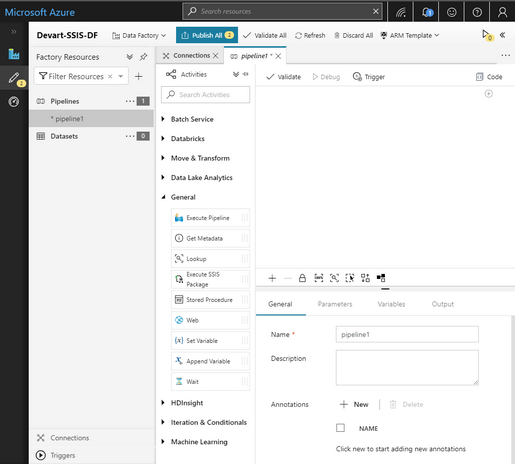

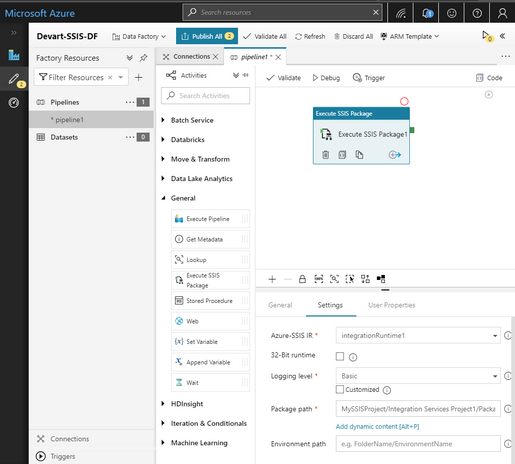

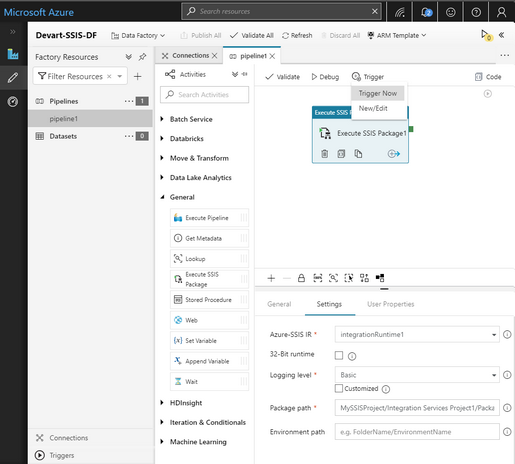

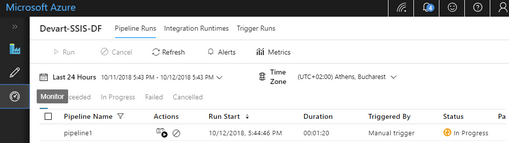

Deploying SSIS Packages on Azure Data Factory

There are several ways to deploy an Integration Services package to Azure SQL Server and run it there; see the Microsoft documentation for details. In this tutorial, we deploy the package directly from Visual Studio and use an Azure Data Factory pipeline to run it. For more details, see: https://docs.microsoft.com/en-us/azure/data-factory/how-to-invoke-ssis-package-ssis-activity
